Facial Recognition in Court: Protecting the Innocent or Wrongfully Arresting Them?

We’ve been hearing a lot lately that facial recognition technology is biased and inaccurate, and is therefore leading to wrongful arrests. I decided it was time to take a deep dive and see what is wrong with the technology I’ve been developing and selling for more than a decade.

I started my facial recognition company Face-Six in 2012, and although I was ahead of the curve when AI started booming back in 2015, the fierce competition in the market eventually forced me to find new market niches as an additional source of income. That included developing facial recognition software for churches to track members’ attendance, for schools to track students’ attendance, and for paramilitary drone units to track terrorists and riot starters. In addition, I started providing expert opinions for the court, based on my facial recognition technology.

Now you might be wondering, why would anyone need a facial recognition expert witness in court?

Well, as it turns out, the police often rely in court on the testimony of an eyewitness who identified the suspect not at the crime scene, but in a photo or video taken there. In other cases, the police do not rely on an eyewitness at all and instead identify the suspect themselves, directly from the CCTV footage of the crime scene.

More often than not, the quality of the CCTV footage is not very good, leaving room for interpretation and false accusations. And this is when the phone rings. On the other end of the line are defense lawyers, including from the Israeli Public Defender’s Office, who want to acquit clients who claim to have been wrongfully identified by the police.

Now you might be wondering again. If we keep hearing that facial recognition is inaccurate and biased, how can the technology be relied upon in court?

Well, I confess that for a long period I didn’t care much about the answer. Facial recognition’s AI models were getting better and better, their accuracy was reaching new heights (with numerous algorithms achieving more than 99.9% accuracy in the latest FRTE Mugshot test), and the lawyers were happy. And all the talk in the media about the issues that the technology raises sounded a bit like mumbo-jumbo to me…

However, after several cases of wrongful arrests involving facial recognition started piling up, I couldn’t ignore it anymore.

I had to reconcile the apparent contradiction. How on earth is it possible that the same technology that is helping to acquit wrongfully identified defendants in court is also involved in wrongfully arresting others?

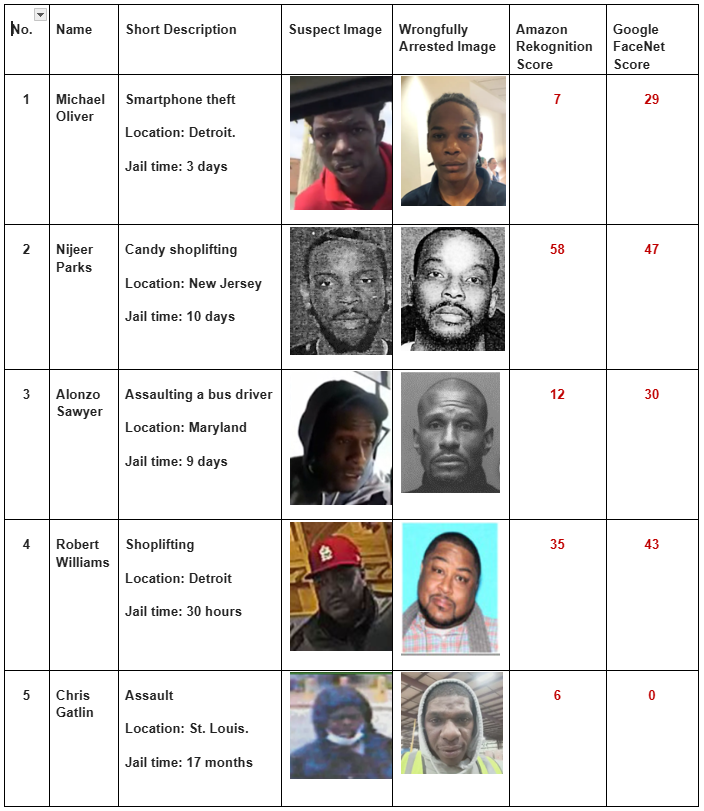

So, I took a deep dive and started investigating. I found out that there were at least eight known cases of wrongful arrests involving facial recognition in the past few years, and I was able to obtain the images that led to five of them.

Image Pair Matching

To understand what was wrong with the technology, I decided to match the image pair from each of the five cases using Amazon’s publicly available Rekognition service and Google FaceNet, a deep neural network model trained on a proprietary dataset. For Google FaceNet, I set a matching threshold of 80%, the cutoff above which two faces are considered a match, similar to Rekognition’s default threshold. Here are the results.

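Wrongful Arrests Matching Table

| Case | Amazon Rekognition | Google FaceNet |
| --- | --- | --- |
| 1. Michael Oliver | 7% | 29% |
| 2. Nijeer Parks | 58% | 47% |
| 3. Alonzo Sawyer | 12% | 30% |
| 4. Robert Williams | 35% | 43% |
| 5. Chris Gatlin | 6% | 0 (quality is too low) |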
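For readers who want to try this kind of pair matching themselves, here is a minimal sketch of how one image pair could be sent to Amazon Rekognition with the boto3 SDK. It is not the exact setup I used: the file paths and the helper names `best_similarity`, `compare_pair`, and `is_match` are hypothetical, and setting `SimilarityThreshold=0` is an assumption made so that low-scoring pairs still come back with a score, with the 80% cutoff applied locally afterwards.

```python
from typing import Optional

MATCH_THRESHOLD = 80.0  # the cutoff used in this article


def best_similarity(response: dict) -> Optional[float]:
    """Return the highest Similarity in a CompareFaces response, or None if no face was compared."""
    scores = [match["Similarity"] for match in response.get("FaceMatches", [])]
    return max(scores) if scores else None


def compare_pair(source_path: str, target_path: str) -> Optional[float]:
    """Send two image files to Rekognition and return the best similarity score (0-100)."""
    import boto3  # imported lazily; requires AWS credentials to be configured

    client = boto3.client("rekognition")
    with open(source_path, "rb") as src, open(target_path, "rb") as tgt:
        response = client.compare_faces(
            SourceImage={"Bytes": src.read()},
            TargetImage={"Bytes": tgt.read()},
            SimilarityThreshold=0,  # assumption: ask for low scores too, filter locally
        )
    return best_similarity(response)


def is_match(score: Optional[float], threshold: float = MATCH_THRESHOLD) -> bool:
    """A pair counts as a match only if a score exists and reaches the threshold."""
    return score is not None and score >= threshold
```

Something like `is_match(compare_pair("cctv_frame.jpg", "suspect_photo.jpg"))` would then give the verdict for one pair; both file names are placeholders.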

Results Analysis

It’s important to note that facial recognition does not answer a yes/no question but a similarity question: how similar, as a percentage, is one image to another? The matching score in our table reflects the similarity between the two images according to Amazon Rekognition and Google FaceNet. Both systems produce a similarity score from 0 to 100.
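To make the "similarity, not yes/no" idea concrete, here is a small sketch of one common way a face model's output can be turned into a 0 to 100 score. It assumes each face has already been converted into an embedding vector and uses cosine similarity, clipped at zero and scaled to a percentage. This is an illustration only, not necessarily the mapping that Rekognition or my FaceNet setup uses, and the function name `similarity_percent` is hypothetical.

```python
import math
from typing import Sequence


def similarity_percent(a: Sequence[float], b: Sequence[float]) -> float:
    """Map the cosine similarity of two embedding vectors to a 0-100 score."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("embeddings must be non-zero")
    cosine = dot / norm
    # Negative cosine values are treated as "no similarity" (assumption).
    return 100.0 * max(0.0, min(1.0, cosine))
```

The point of the sketch is that the software only ever reports a number; whether that number counts as a match depends entirely on the threshold a human chooses.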

How about the results? All five cases resulted in a non-match by both Amazon Rekognition and Google FaceNet, with matching scores well below the 80% threshold (the highest was 58%). Simply put, the wrongfully arrested individuals on our table are not the suspects according to the facial recognition software we used.
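The check itself is simple enough to write down. The sketch below applies the 80% threshold to the scores from the screenshots listed at the end of this article; the variable and function names are just illustrative.

```python
THRESHOLD = 80  # matching cutoff, in percent

# (Amazon Rekognition, Google FaceNet) scores per case, in percent
SCORES = {
    "Michael Oliver": (7, 29),
    "Nijeer Parks": (58, 47),
    "Alonzo Sawyer": (12, 30),
    "Robert Williams": (35, 43),
    "Chris Gatlin": (6, 0),  # FaceNet reported 0: image quality too low
}


def matched_cases(scores: dict, threshold: int = THRESHOLD) -> list:
    """Return the cases where at least one system reached the threshold."""
    return [name for name, pair in scores.items()
            if any(score >= threshold for score in pair)]


def highest_score(scores: dict) -> tuple:
    """Return (case name, score) for the single highest score in the table."""
    return max(((name, max(pair)) for name, pair in scores.items()),
               key=lambda item: item[1])


print(matched_cases(SCORES))  # [] : no case reaches 80%
print(highest_score(SCORES))  # ('Nijeer Parks', 58)
```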

Arrests despite a non-match

So here’s a question. If no positive match was found in those cases, how come these people still got arrested? A possible answer might be that the police relied on low-grade facial recognition systems, but I think the truth lies elsewhere.

A bigger problem

The Innocence Project estimates that the wrongful conviction rate in the US is at least 4%(!). I think it’s fair to assume the wrongful arrest rate is even higher since many innocent people are arrested but never convicted, as charges are often dropped or dismissed early in the process.

Applying just that 4% rate to the 7 million arrests made in the US in 2023, according to the FBI Crime Data Explorer, would mean a staggering 280,000 wrongful arrests in a single year!
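The back-of-the-envelope arithmetic, for anyone who wants to plug in a different rate, looks like this. The two inputs are the figures quoted above; the function name is only illustrative, and the result is a rough estimate rather than a measured count.

```python
ARRESTS_2023 = 7_000_000    # US arrests in 2023, as quoted above
WRONGFUL_RATE_PERCENT = 4   # the 4% wrongful conviction rate, reused as a lower bound


def estimated_wrongful_arrests(arrests: int, rate_percent: int) -> int:
    """Apply a percentage rate to a number of arrests using integer arithmetic."""
    return arrests * rate_percent // 100


print(f"{estimated_wrongful_arrests(ARRESTS_2023, WRONGFUL_RATE_PERCENT):,}")  # 280,000
```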

I believe I’ve stumbled upon the real problem here, and that uncovering the root causes of this wrongful arrest phenomenon could explain why the people on our table were arrested despite a facial recognition non-match.

Given the lack of comprehensive data on wrongful arrests, I turned to the statistics on wrongful convictions in the latest annual report of the National Registry of Exonerations. In 2024, 147 Americans were exonerated after serving long sentences. Among the contributing factors, 71% of the wrongful convictions involved official misconduct and 26% involved mistaken witness identification, with the two often overlapping.

And that, in a nutshell, tells the story of wrongful arrests involving facial recognition.

Final Thoughts

Wrongful arrests involving facial recognition are just a small part of a much larger problem of misconduct and human error.

Screaming headlines like “Facial Recognition Jailed a Man” and “Arrested by AI” may attract attention, but they miss the real point. When we insist on blaming the tool instead of how it’s used, we risk shifting the responsibility from humans to machines.

We should remind ourselves that after all the scary ‘Rise of the machines’ scenarios, it’s still humans who run the world.

That said, not all facial recognition algorithms are created equal. Law enforcement must ensure they’re using accurate, well-trained facial recognition models and be especially cautious when relying on low-quality images.

The real danger lies in harnessing facial recognition technology with the wrong intentions. Therefore, proper usage guidelines must be in place to prevent the misuse or abuse of the technology.

All in all, when used properly, facial recognition can serve as a powerful tool, not only for identifying suspects, but also for clearing the innocent.

Amazon Rekognition and Google FaceNet Screenshots

1. Michael Oliver

Amazon Rekognition – 7%

Google FaceNet – 29%

2. Nijeer Parks

Amazon Rekognition – 58%

Google FaceNet – 47%

3. Alonzo Sawyer

Amazon Rekognition – 12%

Google FaceNet – 30%

4. Robert Williams

Amazon Rekognition – 35%

Google FaceNet – 43%

5. Chris Gatlin

Amazon Rekognition – 6%

Google FaceNet – 0 (quality is too low)